To entice those new customers, we began to capitalize on the cachet of the paint can. First off, the designers were a more difficult market because their demands upon the quality of the can, the manuals, and the software were much higher. To solve this problem, we had Steve Manousos, a veteran publisher with a great feeling for what looked professional. The manuals up to Painter 2.0 were written by Karen Sperling of Write-Design Studio and produced by Steve Manousos.

In Painter 2.0, released in January 1993, the new features that caught the eye were:

- apply lighting

- glass distortion

- watercolors

- recording and playback of sessions

- brush looks

As you can see, we were adding features at a fantastic pace, and so manual design, writing, and production went in-house. But how did I come up with these features?

I drew on my experience in ray tracing for both the apply lighting and glass distortion effects. I also used that expertise when coming up with the apply surface texture effect, by the way. In 1983-1985, I was busy constructing a hybrid ray tracer/shaded 3D modeler/hidden line system for AutoTrol Technologies. I have often mentioned my ability to think three-dimensionally. Well, it helped out considerably during those years, and once again when it came to effects for Painter. I continued to draw on this knowledge when I constructed Detailer, the amazing 3D paint program.

The watercolor brushes (which had an additional layer of information) were jointly developed by Tom Hedges and Bob Lansdon during the Painter 2.0 timeframe. It is lucky indeed that they were so inclined. I later revisited watercolors in Painter 7, which used an unprecedented 4 layers of information to propagate its pigments through the capillaries of the paper grain, and which used a cellular automata-based diffusion process to accomplish this while the user watched.
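For readers curious how a cellular automata-based diffusion can work in principle, here is a minimal Python sketch. It is not Painter's code: the single pigment layer, the `absorbency` grid standing in for paper grain, and the `rate` parameter are all assumptions made for illustration.

```python
def diffuse_step(pigment, absorbency, rate=0.2):
    """One cellular-automaton step: every cell exchanges pigment with its 4 neighbors.

    pigment and absorbency are equal-sized 2D lists of floats. absorbency
    (0..1) is a hypothetical stand-in for paper grain: the flow between two
    cells is scaled by the smaller of their absorbencies.
    Keep rate at or below 0.25 so a cell never gives away more than it holds.
    """
    h, w = len(pigment), len(pigment[0])
    nxt = [row[:] for row in pigment]
    for y in range(h):
        for x in range(w):
            # Visit each neighboring pair once (right and down).
            for dy, dx in ((0, 1), (1, 0)):
                ny, nx = y + dy, x + dx
                if ny < h and nx < w:
                    k = rate * min(absorbency[y][x], absorbency[ny][nx])
                    flow = k * (pigment[y][x] - pigment[ny][nx])
                    nxt[y][x] -= flow    # pigment leaves the fuller cell
                    nxt[ny][nx] += flow  # and arrives in the emptier one
    return nxt


# Usage: drop pigment in the middle of a small sheet and let it spread.
sheet = [[0.0] * 5 for _ in range(5)]
sheet[2][2] = 1.0
grain = [[1.0] * 5 for _ in range(5)]
for _ in range(3):
    sheet = diffuse_step(sheet, grain)
```

Because every unit of pigment removed from one cell is added to its neighbor, the total amount on the sheet stays constant from step to step, and running one step per screen refresh gives the "while the user watched" effect.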

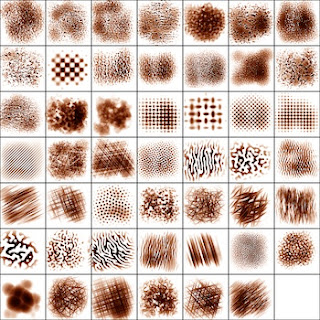

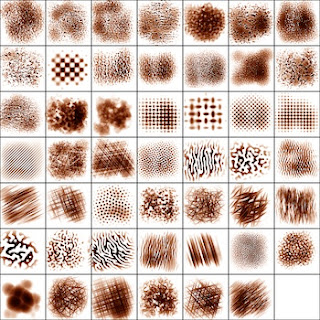

But before even all this, and before even Painter 2.0, I was advancing a secret internal tool, Texture. This was the tool used to produce nearly all of Painter's innovative textures.

To the right, you can see a test sheet with 48 textures, done in February 1992. This showed that Texture was capable of producing speckle, z-buffered rendering, annealing, and anisotropic texture using Sequential Random Addition (coming in a future post, I promise!) even in early 1992. I recognized the need to provide textures early on, because scanning paper grain was so difficult and prone to flaws.

PainterX2, released in June 1993, was a version in between 2 and 3 that had some remarkable new features:

- layers

- layer palette with grouping of layers

- brushing on layers and their masks

- portfolio for storing layers that are used in a single project

- editable sessions

You may have noticed that in Painter 2.0, I started building a session recording and playback feature. This was continued in PainterX2 with the ability to edit the sessions. I literally documented the format so people could drive Painter using programs they wrote.

But the really big addition to this version was layers. We called them floating selections in those days, or (unfortunately) floaters for short. John Derry came up with the Portfolio concept and I implemented it. We also pioneered grouping, painting on layers and their masks, and a palette to access the layers. This was the first commercially available program that featured this capability.

Almost simultaneously, veteran paint system pioneer Alvy Ray Smith produced a similar capability in his revolutionary Composer program from Altamira Software. His was probably working before mine, so I would say that he gets the credit. Plus, he's a lot smarter than me.

Nonetheless, Fractal Design was advancing so fast that, together with the professional look and the quality of our product, it made us look a lot bigger than we actually were.

So we needed an edge to complement this. And we found one: keeping it cool.

The paint can product packaging had already put the perception of Fractal Design solidly in the cool and innovative category. With Painter 2.0, we continued that.

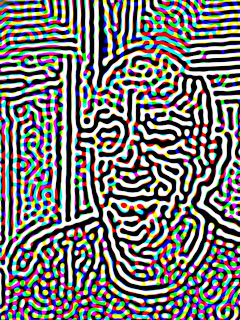

We needed to make sure that our corporate look resonated with the design community. So, John Derry and I consciously moved towards the cool side in Painter 2.0 with the So Hot So Cool campaign. You can see the artwork that John produced for Painter 2.0, reminiscent of a skateboard sticker: a burning ice cube. We also adorned the Painter 2.0 can with some of the art we compiled for this campaign.

Aside from including a burning ice cube sticker inside the Painter 2.0 can, the poster inside the can also had four renditions of a burning ice cube on it, and John and I were quite proud of our campaign. People loved our posters, and we often saw them posted on doors and office walls. For us, it was well worth the investment in word-of-mouth.

Yet, perhaps we went a bit too far. Karen Bria informed us that the East Coast people weren't quite understanding the skateboard sticker theme, and that perhaps it appeared to be too "California". John and I were actually amazed that they even noticed. We were happy that we could have an effect with our approach, but we learned from the event nonetheless. And we never stopped creating new features.

Painter 3, released in November 1994, contained the following new features:

- new drawer-based UI

- frame stacks: rotoscoping video

- onion-skinning for animation

- the Image Hose

- physical bristle modeling

- multiple undo

The Image Hose was also new in Painter 3. This allowed you to draw with life. John Derry and I used the layer masking capability to get pieces of an image, say individual clovers, masked out. Then we could apply shadows to them individually. And finally we could structure them so you could draw with them and produce an endless array of clover. The brush became a generator of images: a literal hose of image data. This was profoundly more valuable than texture, because it brought structure to the brush.
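The idea of a brush that emits images rather than paint can be sketched in a few lines of Python. This is only an illustration, not how Painter implemented the Image Hose: the function name `hose_stamps`, the fixed `spacing`, and the simple rule of cycling through the nozzle in order are assumptions.

```python
import math


def hose_stamps(path, nozzle, spacing):
    """Return (x, y, element) placements along a polyline stroke.

    path is a list of (x, y) points, nozzle is a list of pre-masked image
    elements (any objects), and spacing is the distance between stamps.
    Elements are taken from the nozzle in order, wrapping around.
    """
    stamps = []
    index = 0
    carry = 0.0  # distance travelled since the last stamp
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if seg == 0:
            continue
        # The very first stamp lands on the start of the stroke.
        d = spacing - carry if stamps else 0.0
        while d <= seg:
            t = d / seg
            element = nozzle[index % len(nozzle)]
            stamps.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0), element))
            index += 1
            d += spacing
        carry = seg - (d - spacing)
    return stamps


# Usage: a short horizontal stroke with a two-element nozzle.
placements = hose_stamps([(0, 0), (10, 0)], ["clover_a", "clover_b"], 5)
```

A renderer would then composite each element, with its mask and shadow, at the returned position. Swapping the in-order rule for a random or pressure-driven choice changes the character of the stroke without changing the structure.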

I also got busy implementing multiple undo, bringing Painter up to modern standards. This modification stretched over three revisions of the product, because it was so complicated.

For Painter 4, released in November 1995, our features were:

- Shapes, so you can have layers of vector art

- net painter

- mosaics

- seamless pattern tiling

- reference layers - free transform

- web painter - GIF and JPEG formats

The mosaic feature actually came from my earlier work in Boolean polygon operations. This is an insanely difficult problem to solve. The reference layers feature allowed layers under transform to remain under transform, and consequently rotatable and scalable. This allowed the designer to try out a lot of possibilities without committing to one. It meant that small adjustments could take place without recursive re-sampling of the image, which degrades the image over time. This can be viewed as my entry into non-destructive editing, which, of course, was the entire concept of Altamira Composer.
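To make the point about recursive re-sampling concrete, here is a small Python sketch of the general technique: keep the original pixels untouched, accumulate every adjustment into a single affine matrix, and resample only once when rendering. The `ReferenceLayer` class and its methods are hypothetical names for illustration, not Painter's internals, and the sketch uses nearest-neighbor sampling and rotation about the origin to stay short.

```python
import math


class ReferenceLayer:
    """Keeps the source pixels intact and accumulates one affine transform."""

    def __init__(self, pixels):
        self.source = pixels                      # 2D list, never resampled in place
        self.m = (1.0, 0.0, 0.0, 0.0, 1.0, 0.0)   # rows (a, b, tx) and (c, d, ty)

    def _apply(self, n):
        # Compose: the new step n is applied after the current transform.
        a, b, tx, c, d, ty = self.m
        na, nb, ntx, nc, nd, nty = n
        self.m = (na * a + nb * c, na * b + nb * d, na * tx + nb * ty + ntx,
                  nc * a + nd * c, nc * b + nd * d, nc * tx + nd * ty + nty)

    def rotate(self, degrees):
        r = math.radians(degrees)
        self._apply((math.cos(r), -math.sin(r), 0.0,
                     math.sin(r), math.cos(r), 0.0))

    def scale(self, s):
        # s must be non-zero, otherwise the transform cannot be inverted.
        self._apply((s, 0.0, 0.0, 0.0, s, 0.0))

    def render(self, width, height, empty=0):
        """Resample the untouched source exactly once."""
        a, b, tx, c, d, ty = self.m
        det = a * d - b * c
        out = [[empty] * width for _ in range(height)]
        for y in range(height):
            for x in range(width):
                # Inverse-map the destination pixel back into source space.
                px, py = x - tx, y - ty
                sx = round((d * px - b * py) / det)
                sy = round((a * py - c * px) / det)
                if 0 <= sy < len(self.source) and 0 <= sx < len(self.source[0]):
                    out[y][x] = self.source[sy][sx]
        return out


# Usage: many small adjustments, but only one resampling pass at the end.
layer = ReferenceLayer([[1, 2], [3, 4]])
for _ in range(4):
    layer.rotate(90)
image = layer.render(2, 2)
```

However many times the designer nudges the rotation or scale, the pixels are interpolated from the original only once, so quality does not degrade with each adjustment.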

Seamless pattern tiling was a useful feature for those who needed to create web pages and backgrounds that tiled in various ways. You could paint into a tile and that made it possible to produce completely seamless artistic results.
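One common way to make painting into a tile seamless is to wrap brush coordinates around the tile edges, so a dab that runs off one side reappears on the opposite side. The Python sketch below shows that idea only; the `dab` function and the round, hard-edged brush are assumptions, not a description of Painter's brush engine.

```python
def dab(tile, cx, cy, radius, value):
    """Paint a round dab into tile, wrapping around the edges.

    tile is a 2D list of pixel values. Coordinates that fall outside the
    tile are folded back in with the modulo operator, which is what keeps
    the pattern continuous when the tile is repeated.
    """
    h, w = len(tile), len(tile[0])
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            if dx * dx + dy * dy <= radius * radius:
                tile[(cy + dy) % h][(cx + dx) % w] = value


# Usage: a dab centered on the top-left corner spills onto all four edges.
tile = [[0] * 8 for _ in range(8)]
dab(tile, 0, 0, 1, 1)
```

Because every stroke is written through the same wrap-around rule, the left edge always matches the right edge and the top always matches the bottom, no matter where the artist paints.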

Net Painter, of course, leveraged Painter's scripting capabilities. By this time, everything done in Painter was being recorded locally to the artist's machine in real time.

When it came to marketing, we weren't running out of ideas at all. Because of the mosaics feature, we decided to play on history a bit, using the Painter Through the Ages theme. For this I created a beautiful mosaic frame for the poster, The Miracle of the Paint Can. John Derry created a beautiful image, reminiscent of Vermeer, for which Priscilla Shih (now Priscilla Cinque) posed.

And the Painter Power Palette Picker was a powerful tool and an innovative bit of material to accompany the poster and the manual. That was definitely cool.

Painter 5 was released in June 1997, with the following new features:

- impasto paint

- liquid metal paint

- refracting water droplet paint

- dynamic layers - non-destructive editing (lens layers, dodge and burn layers, torn edges, etc.)

- photo brushes

- gooey brushes

Winter of Love 3 Poster. Artist: John Derry

Painter 5 was released approximately in sync with the merger with MetaTools, and it contained a veritable wealth of new brushes and layers. The concept of non-destructive editing was taken to the extreme by having layers you could dodge and burn into, layers that refracted the data below them, and even liquid metal layers.

Painter 5's theme was A Monument to Creativity. For this I created the Mount Brushmore image. The process of ideation for the Painter 5 logo form and ad concepts is detailed in Creativity and Painter, Part 4.

Painter 6, released in September 1999, had a set of new features that were quite brush-oriented:

- next-generation multi-bristle brush engine

- load brushes with multiple colors

- leaner, clearer UI

- life-like natural spray airbrushes

- Interactive Image Hose, allowing changes to scale and rotation in real time

- Painting with patterns, neon, tubes, and gradients

- responsive palette knife

These led us to take an approach that featured brushes. Because Only Painter could do these things. This was less cool and more of a preservationist attitude, a response to products imitating Painter's capabilities and to Photoshop duplicating Painter's brushwork.

Painter 7 had a set of new features that I worked on as well, as a consultant working for Corel:

- liquid ink

- watercolor version 2 (much more realistic)

- animated absorption of pigment

- woodcut effect