A cohesive design is almost always made up of separate pieces.

Interrelationships Between Pieces

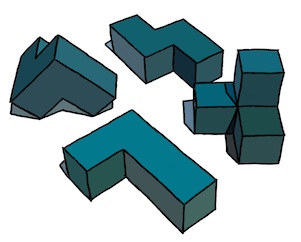

Also, the relationship between the pieces is key. In an organization, one department produces what another department requires. In development, one module produces a result that is used by one or more other modules. In three-dimensional objects, the objects can fit together like a dovetail joint.

In a drawing, the pieces can be shaded to fully reveal their form. They can shadow other pieces to show their inter-positioning. When you see a drawing, it can make you think about how the figures in the drawing are placed, and what message is intended by the artist. In a still-life this may be of little consequence. In an Adoration of the Magi, this can be of great consequence.

Cycles

The interconnection of pieces can be cyclic, producing an induction. This cycle should be essential to the concept of the design. In programming, the loop should be essential to the working of the program, an iteration that converges on a desired result.
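As a minimal sketch of a loop that is essential to the working of a program, consider an iteration that converges on a square root. The function name `newton_sqrt`, the tolerance, and the step limit are illustrative assumptions, not anything prescribed here; the point is only that each pass feeds its own result back in until the answer settles.

```python
def newton_sqrt(value: float, tolerance: float = 1e-12, max_steps: int = 100) -> float:
    """Approximate sqrt(value) by repeating one refinement step until it settles."""
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return 0.0
    # Any positive starting guess works; this one is an arbitrary choice.
    estimate = value if value >= 1 else 1.0
    for _ in range(max_steps):
        # The cycle: the previous estimate is the input to the next one.
        refined = 0.5 * (estimate + value / estimate)
        if abs(refined - estimate) <= tolerance * refined:
            return refined
        estimate = refined
    # Fall back to the best estimate if the step limit is reached.
    return estimate
```

Remove the loop and nothing remains of the method, which is one way to recognize that the cycle belongs to the concept rather than to the implementation.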

In a drawing, the interrelationship becomes essential to the piece as well, as indicated by this impossible triangle, copied loosely from Oscar Reutersvärd, the Swedish artist. Sometimes we can highlight something different than what was originally intended, as in this case: we indicate how the figure can be made of three L-bends that mutually depend upon each other. Impossible figures often make an excellent illustration of cyclic structures.

Also, though, looking at cycles in different ways can reveal to us more about the problem than we originally knew.

Development In Pieces

In development, we first conceive of a problem to solve and then sketch out a structure of how we will solve it. Then it helps to divide the problem into pieces. It suits us best if each piece is well-defined. We know its inputs, its results, and how it will produce them. When a piece is too complex, we can divide it up into smaller pieces.

Each piece can then be worked on individually, either sequentially by one person or concurrently by multiple people in a workgroup. Because each piece of the problem has a different nature, this lends itself to specialization, which is suited to modern workgroups. Each piece can then be tracked separately. The interrelationship between the pieces will need to be known by the manager to properly chart the progress of the development.

Most large projects are done this way. When they are done by one person, then that person needs to understand the workings of the project as a whole, and this can lead to a huge, unmanageable situation. But not always. When a problem gets too large for one person, the pieces of the problem lend themselves to adding extra people to help, and so project division is essential to minimizing unpredictable schedules.

When Pieces Fail To Connect

When conceptualizing the division of a project into pieces, it is sometimes not possible to foresee each and every wrinkle in the workings of each of the pieces. This can lead to a situation where a piece cannot be constructed or where some pieces can't be connected properly.

It is times like these when it's important to stand back, take stock of what you have learned, and integrate that into the design. Sometimes this necessitates a redivision of the project into new pieces. Sometimes the redivision only affects a few neighboring pieces. This is part of the art of project design.

Development Strategies

The pieces of a project represent the result of top-down decomposition, which usually works as a division process. Once you have a project split into pieces, and the pieces implemented, then it becomes a problem of making sure that each piece works as it should.

This entails isolation of the piece, testing its inputs, and validating its results.
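A small sketch of what that isolation can look like, assuming a hypothetical piece called `normalize` that rescales a list of samples. Both the piece and the check are invented for illustration; the pattern is simply to call the piece alone with known inputs and validate the results.

```python
from __future__ import annotations


def normalize(samples: list[float]) -> list[float]:
    """Hypothetical piece: rescale samples so they span 0.0 to 1.0."""
    if not samples:
        return []
    low, high = min(samples), max(samples)
    if high == low:
        # A flat input has no range to rescale, so map everything to 0.0.
        return [0.0 for _ in samples]
    return [(s - low) / (high - low) for s in samples]


def check_normalize() -> None:
    """Exercise the piece by itself: known inputs in, validated results out."""
    assert normalize([]) == []
    assert normalize([5.0, 5.0]) == [0.0, 0.0]
    assert normalize([2.0, 4.0, 6.0]) == [0.0, 0.5, 1.0]


check_normalize()
```

The check covers the awkward inputs (empty and flat) as well as the ordinary one, since those are usually where a piece fails to connect with its neighbors.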

In a workable system, it is essential to be able to view the intermediate results of each piece. In a graphics system, this means literally viewing them on a screen to visually verify that the result is correct. And sometimes, the ability to view each minute detail is also required.

In a system that is constructed in pieces, one problem the authors face is this: how can we add a new feature or behavior to the project? This is important because usually it is necessary to construct a simplified version of the project and then make it more complex, adding features, until it is complete.

A useful capability is this: build a simplified version of a piece for testing with the other pieces. Then, each developer can work with the entire project and flesh out their piece independently. Or, even better, a new version of the piece can be checked in, adding essential capabilities, while more complex behavior gets worked on independently.
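One way to sketch this, under the assumption that the piece is a blur step in a small graphics pipeline: the names `blur_stub`, `blur_box`, and `render` are hypothetical. The simplified piece and the fuller piece share the same inputs and outputs, so the rest of the project can run against either.

```python
from typing import Callable, List

Row = List[float]


def blur_stub(row: Row) -> Row:
    """Simplified stand-in: same inputs and outputs, no real work yet."""
    return list(row)


def blur_box(row: Row) -> Row:
    """Fuller version: 3-sample box filter, clamping at the edges."""
    last = len(row) - 1
    return [
        (row[max(i - 1, 0)] + row[i] + row[min(i + 1, last)]) / 3.0
        for i in range(len(row))
    ]


def render(row: Row, blur: Callable[[Row], Row] = blur_stub) -> Row:
    """The rest of the project relies only on the agreed interface."""
    brightened = [min(v * 2.0, 1.0) for v in row]
    return blur(brightened)
```

Calling `render(row)` exercises the whole project with the stand-in, and `render(row, blur=blur_box)` swaps in the fuller piece once it is ready, without touching anything else.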

Performing the Division

I mentioned top-down decomposition as a useful tool in dividing up a project into pieces. But this must be tempered with other considerations. One is the necessity that each piece do exactly what it needs to do, no more and no less. Another is the requirement that the inner loops be as simple as possible, necessitating the factoring of extraneous and more complex cases out. This means that the subdivision must be judicious, to achieve local economy within each piece. I have been on many projects where this goal was a critical factor in deciding how to divide the problem up into pieces. This can also serve as a razor which cuts away inessential parts, leaving only a minimal interconnection of pieces.
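To illustrate factoring the complex cases out of an inner loop, here is a hedged sketch using a hypothetical `smooth` function that averages each sample with its neighbors. The short-input case and the two edge samples are handled outside the loop, so the loop body needs no bounds checks at all. The clamped treatment of the edges is an assumption made for the example.

```python
from typing import List


def smooth(row: List[float]) -> List[float]:
    """Average each sample with its two neighbors, repeating the edge sample."""
    n = len(row)
    if n < 3:
        # Degenerate inputs are factored out before any looping starts.
        return list(row)
    out = [0.0] * n
    # Edge cases handled once, outside the loop (edge sample counted twice).
    out[0] = (2.0 * row[0] + row[1]) / 3.0
    out[-1] = (row[-2] + 2.0 * row[-1]) / 3.0
    # Inner loop: one uniform expression, no special cases.
    for i in range(1, n - 1):
        out[i] = (row[i - 1] + row[i] + row[i + 1]) / 3.0
    return out
```

The trade is a few extra lines outside the loop in exchange for a loop body that does exactly one thing.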

You also want to make sure the project is organized so that, if a piece fails, we can directly verify this by turning it on and off, and seeing the result of its action and the effect of it on the entire result. This is particularly useful when each piece is a pass of the total process, like in a graphical problem, or in a compiler.
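A minimal sketch of that organization, assuming the project is a list of named passes: `run_passes`, `PASSES`, and the three toy passes are all hypothetical. Any pass can be switched off by name, and the result after each enabled pass is recorded so it can be inspected.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

Pass = Tuple[str, Callable[[List[int]], List[int]]]


def run_passes(
    data: List[int],
    passes: List[Pass],
    disabled: Optional[Set[str]] = None,
) -> Tuple[List[int], Dict[str, List[int]]]:
    """Run each enabled pass in order, keeping every intermediate result."""
    disabled = disabled or set()
    trace: Dict[str, List[int]] = {"input": list(data)}
    current = list(data)
    for name, step in passes:
        if name in disabled:
            continue  # the pass is turned off; its input flows through unchanged
        current = step(current)
        trace[name] = list(current)
    return current, trace


# Toy passes, invented for the example.
PASSES: List[Pass] = [
    ("drop_negatives", lambda xs: [x for x in xs if x >= 0]),
    ("double", lambda xs: [2 * x for x in xs]),
    ("sort", sorted),
]
```

Running `run_passes(data, PASSES)` and then `run_passes(data, PASSES, disabled={"double"})` and comparing the two traces shows directly what the suspect pass contributes to the final result.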

Also, it is useful to construct a test harness that contains UI so that each piece can be independently controlled, preferably with real-time adjustment. This is a great way to exercise the project. I have used this many times.

Taking Stuff Apart

Moving from development to three-dimensional construction, the disassembly process can reveal a tremendous amount about the problems encountered in producing the object, device, or mechanism. When I was a kid, I liked to take things apart. Of course, putting them back together took a bit longer.

In modern times, there are entire companies that specialize in taking gadgets apart, and even slicing open chips to reveal their inner workings. This is the process of reverse-engineering. Examples of companies that do this are chipworks.com and iSuppli.

Gadgets

I was going to do a section on gadgets and the pieces thereof, but I realized that my knowledge of such things is really not up for grabs, nor is it for public consumption.

It's really too bad since gadgets are a classic example of how each part needs to do as much as possible with as few resources as can be spared. This is one of the basic design decisions that govern the division of a project.

Often the most remote considerations suddenly become of primary importance in the division process.

Code

A friend wishes to divide up code in such a way that module authorship can be retained and the usage monitored so royalties can trickle in the proper way back to the source. Very distributed-economy. This reminds me of the App market in a way, and I'll tell you why.

In the early days of software, there was much custom software that cost huge amounts of money. There were accounting systems and mainframes. These would often cost a hundred thousand dollars. The CAD systems I worked on in the 70s were very expensive as well, and specialized software, such as all-angle fracturing software, could cost plenty. It's funny how big business still maintains this model, with distributed systems still costing lots of money. This will certainly be replaced by a distributed app-based model. Some believe that the gadgets are only the front end to a giant database. This model will be replaced by the cloud model.

In the 80s, personal computers' penetration increased and software became a commodity that was sold on the shelves of computer stores. This drove the average price down to hundreds of dollars, but some software still could command up to a thousand dollars. Consider Photoshop and the huge bundles of software that have become the Creative Suite. As time went by, lots of software was forced into bundles in what I call shovelware: software that comes with too much extraneous stuff in it, to convince the buyer that it is a wonderful deal. I'm thinking of Corel Draw! in those days. Nowadays, sometimes computers are bundled with crapware, which is the descendant of shovelware.

The commoditization of software was just a step in the progress of applications. Now, applications are sold online for the most part, even with over-the-air delivery. This is because much computing has gone mobile and desktop usage is on the decrease. Many desktops have in fact been replaced by laptops, which was one step in the process.

But the eventual result was that software is now sold for a buck and the market has consequently been widened to nearly everyone.

To do this, the software had to become easier. The model for the use of the software had to become easier. The usefulness of an application had to become almost universal for this to occur and for applications to become more finely grained. Apps now sell for anywhere from free to ten bucks. But on the average, perhaps a complex app will cost a piddling two dollars.

Is it realistic for the remuneration of code authorship to also go into the fine-grained direction from the current vanguard of open-source software? Nowadays, many app authors receive royalties for their work. The market for applications has exploded and the number of app designers has also exploded: widely viewed as the democratization of programming. This is the stirring story of how app development penetrated the largest relevant market. Can the programmers themselves become democratized?

The applications of today live in a rich ecosystem of capabilities that include cameras, GPS, magnetic sensors, accelerometers, gyros, and so much more. For code itself to go down a democratization path, I expect that the API it lives under will have to be just as rich.

Unfortunately, the API is owned by the platforms. And even, as in the case of Java (as we have found out this last week), by the company that bought it (Oracle). Apparently an API can be copyrighted, which is a sticky wicket for Google. The vast majority of apps are written for iOS today. But, if this won't be true forever, then at least it has clearly indicated how to create an incredibly successful business model around applications. And it indicates that APIs will certainly be heavily guarded and controlled.

The spread of technology is never as simple as entropy and thermodynamics, though the concepts may certainly bear on the most profitable use case.

Either way, the democratization of code could possibly solve the litigation problem, at least when it comes to applications built on top of APIs, because the new model might in some sense replace the patent model by reducing ownership to a revenue stream, democratizing software developers. But the APIs could not be a part of this solution as long as the platform developers considered them to be proprietary.

So, in the end, I don't think system software can be a client for this model. Unless it's the GNU folks.
